The e-learning team has a long-term goal of building our testing framework at the same time as writing the specification of a project, something like a test-driven development environment. Because we are a small team, and because some of our projects grow organically, this isn’t always possible.

The Sussex import grew out of a proof-of-concept project that set out to establish that we could import materials between courses without using the expensive backup functionality. It only became a project when we realised it wasn’t only possible, it was eminently sensible. As a result we needed to build in our testing framework after we had written the import feature.

We wanted the testing framework to expose changes to the database contents so we were confident the feature wasn’t compromising data integrity. This would complement our usual testing.

We looked into the inbuilt Moodle unit tests, which in our 1.9 version of Moodle were based on SimpleTest. We thought that the implementation of SimpleTest in Moodle was potentially powerful because it could run multiple tests before a project went live, establishing that the changes hadn’t broken the functions being tested.

However, we ultimately decided that SimpleTest was unsuitable in this case because the import creates entities in the database. Running this for each Moodle module every time we wanted to run a unit test would be too labour-intensive.

Instead we decided to write our own unit tests (loosely described) which logged the results of the tests in the browser console.

The tests would collect data before and after the import and then compare the data returned. The comparison would give the pass and fail results for each test.
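The before-and-after comparison can be sketched roughly as follows. This is a minimal illustration in Python, not the code we ran: the `Snapshot` fields, the `compare` function and the sample numbers are all hypothetical, and a real test would fill the snapshots from database queries.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical counts collected for one course at one point in time."""
    course_modules: int
    sections: int
    assets: int


def compare(before: Snapshot, after: Snapshot, expected_delta: dict) -> dict:
    """Return a pass/fail flag per field, given the change each field should show.

    Fields missing from expected_delta are expected to stay the same.
    """
    b, a = asdict(before), asdict(after)
    return {
        name: (a[name] - b[name]) == expected_delta.get(name, 0)
        for name in b
    }


# Illustrative numbers only: one asset imported into an existing section.
source_before = Snapshot(course_modules=12, sections=5, assets=12)
source_after = Snapshot(course_modules=12, sections=5, assets=12)
target_before = Snapshot(course_modules=3, sections=5, assets=3)
target_after = Snapshot(course_modules=4, sections=5, assets=4)

# The source course should not change; the target gains one module and one asset.
source_results = compare(source_before, source_after, {})
target_results = compare(
    target_before, target_after, {"course_modules": 1, "assets": 1}
)

for course, results in (("source", source_results), ("target", target_results)):
    for name, passed in results.items():
        print(f"{course} {name}: {'pass' if passed else 'fail'}")
```

Keeping the expected change separate from the snapshots means the same comparison can be reused for the source course (no change expected) and the target course (increments expected).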

The comparison looked at a number of data components including:

Course module count

The course module count established that the course modules in the source course stayed the same and the course modules in the target course incremented by one for each asset imported.

Section count

The section count established that the sections in the source course stayed the same. If the section of the imported asset already existed in the target course, the sections in the target course also stayed the same; if it didn’t, the sections in the target course incremented by one.
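The section rule for the target course comes down to a single conditional. The sketch below is a Python illustration under the assumption that sections can be identified by a number; `expected_target_sections` is a hypothetical helper, not a Moodle function.

```python
from typing import Set


def expected_target_sections(sections_before: Set[int], imported_section: int) -> int:
    """Expected number of target sections after importing one asset.

    sections_before holds the section numbers already present in the
    target course; imported_section is the section of the imported asset.
    """
    if imported_section in sections_before:
        # The section already exists, so the count should stay the same.
        return len(sections_before)
    # The section is new to the target course, so the count goes up by one.
    return len(sections_before) + 1


existing = {0, 1, 2}
print(expected_target_sections(existing, 2))
print(expected_target_sections(existing, 4))
```

A test would compare this expected value with the section count actually found in the target course after the import.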

Assets count

The assets (module) count established that the assets in the source course stayed the same and the assets in the target course incremented by one for each asset imported.

Asset specifics

The asset specifics tested that modules had been correctly copied. The most complicated module was the quiz. The test established that the source course quiz, quiz questions, question answers and question categories all stayed the same and that a new target course quiz was created, with identical quiz questions and answers put into a new category associated with the course.
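To make the quiz checks concrete, here is a rough Python sketch. The `Question` and `Quiz` classes are a simplified, hypothetical data model invented for this example; they do not mirror Moodle’s actual tables, and the sample quiz is made up.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Question:
    text: str
    answers: Tuple[str, ...]


@dataclass(frozen=True)
class Quiz:
    course_id: int            # course the quiz lives in
    category_id: int          # question category used by the quiz
    category_course_id: int   # course that the category is associated with
    questions: Tuple[Question, ...]


def check_quiz_copy(source_before: Quiz, source_after: Quiz, target: Quiz) -> Dict[str, bool]:
    """Return a pass/fail flag for each quiz check described above."""
    return {
        "source unchanged": source_before == source_after,
        "questions and answers identical": target.questions == source_after.questions,
        "new category created": target.category_id != source_after.category_id,
        "category belongs to target course": target.category_course_id == target.course_id,
    }


# Illustrative data only.
questions = (
    Question("What is 2 + 2?", ("3", "4")),
    Question("Is the sky blue?", ("Yes", "No")),
)
source = Quiz(course_id=10, category_id=100, category_course_id=10, questions=questions)
copied = Quiz(course_id=20, category_id=200, category_course_id=20, questions=questions)

quiz_results = check_quiz_copy(source, source, copied)
for check, passed in quiz_results.items():
    print(f"{check}: {'pass' if passed else 'fail'}")
```

Frozen dataclasses compare by value, so a single equality test covers the quiz, its questions and their answers in one go.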

An example of how the test data helped

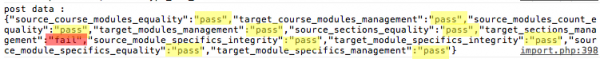

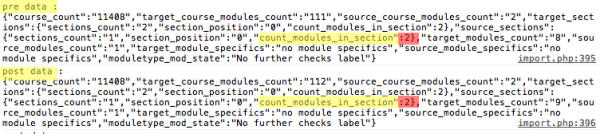

The comparison data would return like this in the browser console:

In the case above it can be seen that there is a problem in the target sections. The details were also stored in the console log so that the programmer could look into the details and see what actually happened.

The details showed that the import did not increment the number of assets added to the section.

The unit tests proved a useful addition to the project, allowing us to feel more secure that the import was not compromising data integrity.

We hope this blog detailing our approach will be useful to others.

As always, your comments are welcome!
