Description

Register for a free ticket, which includes lunch and refreshments.

Arrive at 9:45 for coffee and a 10am start.

Research on smart home technology often includes only a limited set of participants in design and evaluation work. Typically, participants are confident with technology, primarily aged 18-30, disproportionately male, and do not have disabilities. It can be hard to recruit a wider and more representative set of participants to take part in design work, but it is essential if technologies are not to be skewed towards the needs of a limited group.

This symposium presents research that deliberately seeks to include a broader range of users in the design of smart home technologies, and includes talks on academic and consumer research.

Schedule:

9:45 – Coffee and pastries

10:15 – Introduction

10:30 – Simone Stumpf, City University of London – Developing a sensor-assisted toolset to improve quality of life for people with early stages of dementia and Parkinson’s

11:00 – Eric Harris, Research Institute for Disabled Consumers – Being smart about ability

11:30 – Coffee

11:45 – Kate Howland, University of Sussex – Designing for end-user programming for the home through voice

12:15 – Charlotte Robinson, University of Sussex – Designing technology to support mobility assistant dogs

12:45 – Lunch

13:45 – Interactive Workshop – Exploring the futures of smart households – Emeline Brulé, University of Sussex

15:00 – Coffee

15:15 – Panel: Technology and children’s voices in and beyond the home

- Oussama Metatla, University of Bristol

- Nicola Yuill, University of Sussex

- Seray Ibrahim, University College London

16:15 – Closing discussion

16:30 – End

—

Talk abstracts:

Dr. Simone Stumpf: Developing a sensor-assisted toolset to improve quality of life for people with early stages of dementia and Parkinson’s

In this talk I will present our work on the Self-Care Advice, Monitoring, Planning, Intervention (SCAMPI) research project. In this project, we are developing a toolset for people living with early stages of dementia and Parkinson’s disease to monitor and improve their quality of life. The toolset includes several artificial intelligence (AI) components that reason over data (e.g. a computational model of activities and quality-of-life goals, and activity models that use low-cost sensors placed in people’s own homes to help keep track of activities), as well as a user interface to set up and keep track of a quality-of-life plan. Our toolset was co-designed with people living with dementia and Parkinson’s, and their informal carers, in order to produce effective healthcare technology.

Eric Harris: Being smart about ability

Connected consumer products are increasingly being used to create smart home environments which are configured to the occupants’ needs. What are the opportunities for using this technology to the benefit of people with disabilities? What do people with disabilities think about smart homes and what are the challenges for this consumer market?

This talk will present some first-person views about the technology and show use-case examples. It will also highlight where work still needs to be done to better support people with disabilities.

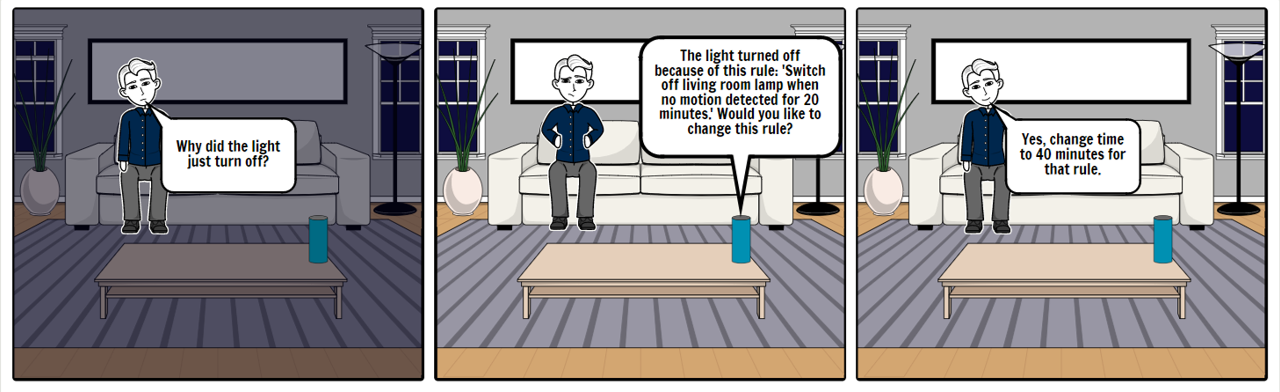

Dr. Kate Howland: Designing voice user interface support for end-user programming in smart homes

This talk will present design-based research on how voice user interfaces (VUIs) can support end-user programming (EUP) tasks in smart home contexts. VUIs, in the form of smart speakers, are one of the most popular smart home control mechanisms, but they provide little support for setting up, querying and changing automated behaviours through voice. We explored the potential for supporting such activities in a mixed-methods domestic design study with 15 participants who had little or no programming experience. We recruited participants who were not typical early adopters and did not self-identify as ‘tech-savvy’. Our participants were in middle to older adulthood and we had a good representation of women, and people with visual and mobility impairments (who are often identified as potential beneficiaries of smart home technology, but are rarely actively involved in smart home design studies). We used semi-structured interviews, Wizard of Oz prototyping and roleplaying to identify opportunities and challenges for using voice interaction to set up and change automated behaviours.

Dr. Charlotte Robinson: Designing technology to support mobility assistant dogs

Assistant dogs are a key intervention to support the autonomy of people with tetraplegia. Previous research on assistive technologies has investigated ways to ultimately replace the dogs’ labour with technology, for instance through the design of smart home environments. However, both the disability studies literature and our interviews suggest there is an immediate need to support these relationships, in terms of both training and bonding. Through a case study of an accessible dog-treat dispenser, we investigate a technological intervention that responds to these needs, detailing an appropriate design methodology and contributing insights into user requirements and preferences.
