Welcome to the first of what we anticipate will become regular (monthly-ish) updates on new developments in AI and Higher Education.

As this is the first such blog post, and it comes at the end of a summer that has seen significant developments in the Higher Education sector's and the UK government's responses to generative AI, this is something of a bumper edition.

But first, let me update you on developments within Sussex.

What is the Sussex response?

In summary, the University is taking the approach that:

“through innovative, authentic, and appropriate assessment design, along with staff and student education, we can continue to measure attainment through a wide range of assessments.

“‘We don’t need to revert to in-person exams: this is a great opportunity for the sector to explore new assessment techniques that measure learners on critical thinking, problem solving and reasoning skills rather than essay-writing abilities’ (JISC, 2023).”

UoS Statement on Advances in Technology and Academic Integrity, March 2023

What does this mean in practice?

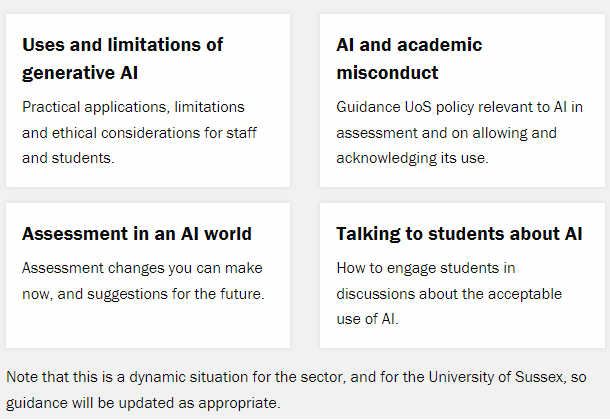

For more information about the University of Sussex response, see the newly updated guidance in the AI in teaching and assessment section of the Educational Enhancement website. In developing these pages, we liaised with colleagues from AQP, Academic Regulations, Skills Hub, Careers & Entrepreneurship, the Library, and academic colleagues across the University to ensure our message is consistent with that of the wider institution and reflects sector best practice. The screenshot below shows the links on the main page, which also provides a full list of the support we offer to Sussex colleagues and links to some of our favourite resources elsewhere on the web.

A new ‘Advances in Technology and Academic Integrity Group’ has emerged from these conversations; it will feed into the ongoing institutional response to generative AI and more. Educational Enhancement has also contributed an update on AI to the academic misconduct briefings for module convenors in MAH, and we are happy to do the same for others.

We are also poised to launch an AI Community of Practice for the University. Look out for announcements, or email EducationalEnhancement@sussex.ac.uk and ask to be added to the mailing list.

Last week we ran the first of our staff workshops on ‘Assessment in an AI world’, and another session is planned for Monday 9th October (see ‘What’s on this month’, below, for links).

Updates from the HE sector

The Sussex response to generative AI is in keeping with much of the sector. Here are a few headlines from the last few months:

AI detection software dies a quiet death: In July, OpenAI, the creators of ChatGPT, quietly withdrew their AI detection software due to its low rate of accuracy (poor detection rates and many false positives). In fact, there are no independently validated tools that can reliably and accurately detect material produced by generative AI. Inaccuracy isn’t the only reason we ask colleagues not to use such tools: doing so means uploading student work to unsupported and unregulated sites. We don’t have students’ permission to do that, and we would just be further feeding the beast.

AI detectors are biased: In July, researchers from Stanford published research demonstrating that GPT detectors are biased against non-native English writers.

Russell Group principles on the use of AI in education: Also published in July, the Russell Group’s new principles seek to shape institution- and course-level work to support the ethical and responsible use of generative AI. The five principles recognise the need for the sector to: support students and staff to become AI-literate; equip staff to support students to use generative AI tools effectively and appropriately; adapt teaching and assessment to incorporate the ethical and equitable use of generative AI; ensure academic rigour and integrity are upheld; and work collaboratively to share best practice as the technology and its application in education evolve.

Happily, the principle of collaborative working and sharing best practice has been with us from the start. Like us, many universities have been busy updating their guidance for staff and students over the summer, often under a Creative Commons licence, with the aim of building staff and student AI literacy and providing clarity on academic integrity and the ethics of AI use. See, for example, new guidance published by University College London, King’s College London and the University of Sydney (the latter also provides a handy summary of a selection of the more common AI tools and their pros and cons). Examples of great practice are also being shared via a host of international networks and webinars. See our Teaching with AI Collaborative Padlet and the links to upcoming events below.

The QAA response to the DfE consultation on generative AI in education: Published on 7th September, the QAA response makes for a challenging read. They explain how, over time, human-AI writing will come to be considered the norm, and argue that the sector will need to reconsider what it means by ‘plagiarism’ and associated academic misconduct. They also suggest the sector may need to rethink or re-establish the baseline level of achievement students are capable of, in order to maintain the integrity of the grade classification system. And while the Russell Group principles manage to sidestep the issue of a return to in-person exams, the QAA:

“warn against any knee-jerk reactions to the re-design of assessments that see a return to in-person, invigilated exams as the predominant form of assessment, as it has been shown to be a poor reflection of student ability, inaccessible for many with disabilities and additional needs, and not adequately preparing students for the workplace.”

(See the QAA website for guidance on how to address some of these challenges.)

What’s on this month

Sign up to join the Educational Enhancement ‘Assessment in an AI world’ online workshop on Monday 9th October, 14:00-15:30.

Join the University of Kent’s ‘Empowering Tomorrow: Unleashing Creativity through Generative AI’ conference on 18th October, 14:00-19:00. Joining links for sessions can be found on the conference programme. See also Kent’s back catalogue of Digitally Enhanced Education Webinars, which provide short talks on practical examples of AI in education.

And one you may have just missed, but which is available online: WonkHE’s session, recorded on 28th September, which asks ‘How do students want to learn about AI?’

If you are aware of events coming up that aren’t featured here, please contact us or add them to the UoS Teaching with AI Collaborative Padlet.