In this case study, Dr Myrna Hennequin, Lecturer in Economics, shares her strategies for making online quantitative assessments more AI resilient.

What I did

I redesigned the online assessments for the module Quantitative Methods for Business and Economics: Maths (QMBE A). This introductory maths module for Foundation Year students is assessed by several Canvas quizzes. My goal was to create computer-markable exams that are varied, balanced and more resilient against academic misconduct through AI or collusion.

Why I did it

Creating effective online assessments for quantitative modules poses several challenges. With the rapid rise of AI tools such as ChatGPT, I was concerned about cheating through the use of AI. At the same time, timetabling issues meant that the window during which the in-semester tests were available had to be extended from one hour to four hours, increasing the opportunity for collusion.

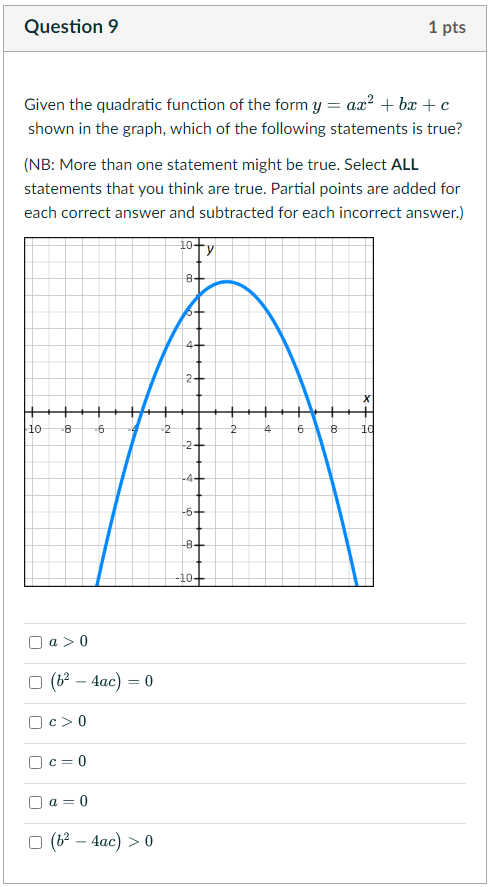

In response to these issues, I aimed to make the quizzes more resilient. As the current free version of ChatGPT (GPT-3.5) does not allow for uploading images, I ensured that each quiz included one or more questions involving graphs.

I also took advantage of Canvas’s built-in maths editor, which puts formulas in LaTeX format. It is currently not possible to directly copy these formulas into ChatGPT, which deters students from quickly copying an entire question including formulas.
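As an illustration of what "LaTeX format" means here, the source behind a formula could look like the following. The quadratic formula is an assumed example and is not taken from the module's quizzes. Students see the rendered formula rather than this source text.

```latex
x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}
```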

Another strategy I use is randomisation. Canvas supports this in two ways: question groups randomise whole questions, and formula questions set up numerical questions with random numbers. To deter collusion, I ensured that nearly all quiz questions were randomised in some way.
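The two kinds of randomisation can be sketched in a few lines of Python. This is only a conceptual model, not Canvas code or its API: the function names, the variable ranges, the placeholder question titles and the idea of seeding by a student number are all assumptions made for illustration.

```python
import random


def make_formula_variant(rng):
    """Draw random numbers and return (question text, correct answer)."""
    # Hypothetical variable ranges, chosen only for illustration.
    a = rng.randint(2, 9)
    b = rng.randint(1, 20)
    x = rng.randint(1, 5)
    text = f"Given f(x) = {a}x + {b}, calculate f({x})."
    return text, a * x + b


def is_correct(submitted, answer, margin=0.01):
    """Accept a numerical answer within an absolute margin of error."""
    return abs(submitted - answer) <= margin


def draw_from_group(pool, k, rng):
    """Mimic a question group: pick k distinct questions from a pool."""
    return rng.sample(pool, k)


def build_quiz(student_id):
    """Assemble one student's quiz; seeding makes the draw reproducible."""
    rng = random.Random(student_id)  # assumption: one seed per student
    pool = ["Graph question A", "Graph question B",
            "Graph question C", "Graph question D"]  # placeholder titles
    picked = draw_from_group(pool, 2, rng)
    text, answer = make_formula_variant(rng)
    return picked, text, answer


if __name__ == "__main__":
    for student_id in (1, 2):
        print(build_quiz(student_id))
```

Because each student is likely to see different whole questions and different numbers, sharing a final answer with a classmate is of little use. Sharing the method still helps, which is one reason to combine randomisation with the other strategies.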

Lastly, I created a varied and balanced exam by using different question types. Even within the MCQ assessment mode, there are many ways to ask questions beyond the standard multiple-choice question consisting of a prompt and a set of answer options. I make use of a range of question types available in Canvas quizzes:

- ‘Formula questions’ are numerical questions with randomised numbers.

- ‘Fill in multiple blanks’ can be used to let students construct a step-by-step solution to a problem.

- ‘Multiple answers’ are multiple-choice questions where there might be more than one correct answer.

- ‘Multiple dropdowns’ are essentially fill-in-the-blank questions with predetermined answer options.

- ‘Matching’ questions ask students to match a series of statements to a fixed set of answer options.

Combining various question types makes it possible to test different aspects of learning and get students to demonstrate depth of knowledge in a computer-markable format.
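To make the step-by-step idea behind 'Fill in multiple blanks' concrete, here is a minimal sketch of how such a question could be marked, one point per blank. It is a simplified model under stated assumptions, not Canvas's marking logic: the square-bracket placeholder names, the example equation, and the `blank_names` and `mark_blanks` helpers are hypothetical.

```python
import re


def blank_names(question_text):
    """Return the blank names written as [name] in the question text."""
    return re.findall(r"\[(\w+)\]", question_text)


def mark_blanks(question_text, accepted, responses):
    """Award one point per blank whose response matches an accepted answer."""
    score = 0
    for name in blank_names(question_text):
        given = responses.get(name, "").strip().lower()
        if given in {a.lower() for a in accepted[name]}:
            score += 1
    return score


# Hypothetical question: each blank is one step of the solution.
QUESTION = (
    "Solve 2x + 6 = 14. Subtract [step1] from both sides to get "
    "2x = [step2]. Divide both sides by [step3] to get x = [step4]."
)
ACCEPTED = {"step1": ["6"], "step2": ["8"], "step3": ["2"], "step4": ["4"]}

if __name__ == "__main__":
    responses = {"step1": "6", "step2": "8", "step3": "2", "step4": "5"}
    print(mark_blanks(QUESTION, ACCEPTED, responses), "out of",
          len(blank_names(QUESTION)))
```

Marking each step separately rewards a correct method even when the final answer is wrong, which a single multiple-choice option cannot do.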

Challenges

Designing robust quizzes and AI-proofing questions requires time and creativity. It can be time-consuming to plan new approaches and to test how well ChatGPT responds to each type of question. However, the Canvas instructor guide proved very useful, as it introduced me to the different possibilities within Canvas quizzes.

Impact and student feedback

I always provide practice quizzes containing the same types of questions as the official assessments, so that students know what to expect and can prepare in advance. Students are happy with this combination of summative and formative assessments: in this year’s mid-module evaluation, 21 of the 62 respondents (about a third) mentioned the practice quizzes and/or the official online tests as a positive point in their written comments.

In terms of results, the distribution of marks for the assessments this year was very much in line with previous years. This suggests that the marks were not inflated by the use of AI tools or other misconduct.
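One simple way to check whether two cohorts' marks are "in line" is to compare summary statistics. The sketch below shows the idea using Python's standard `statistics` module. The marks in it are made-up placeholders, not the module's results, and the `summarise` and `mean_shift` helpers are hypothetical.

```python
from statistics import mean, median, stdev


def summarise(marks):
    """Return the mean, median and sample standard deviation of a cohort."""
    return {"mean": mean(marks), "median": median(marks), "stdev": stdev(marks)}


def mean_shift(current, previous):
    """Change in mean mark, in units of the previous cohort's standard deviation."""
    return (mean(current) - mean(previous)) / stdev(previous)


if __name__ == "__main__":
    # Made-up marks for illustration only; NOT real assessment data.
    previous = [40, 50, 60, 70, 80]
    current = [42, 52, 62, 72, 82]
    print(summarise(previous))
    print(summarise(current))
    print(round(mean_shift(current, previous), 2))
```

A shift that is small relative to the usual spread of marks is consistent with no inflation, although it cannot rule out misconduct on its own.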

Future plans

While my approach makes the quizzes more robust for the moment, AI tools are rapidly evolving. I would expect AI to get better at dealing with images (e.g. graphs) and mathematical formulas. This will be an additional challenge for the future.

Top 3 Tips

- Think creatively: can you ask your quiz questions in a different way? Consider making use of the various question types available in Canvas quizzes.

- Include images (e.g. graphs) to make it harder to answer questions using AI (*). Use Canvas’s built-in maths editor for any formulas so that they cannot be copied directly.

- Use randomisation to deter collusion. You can randomise questions as a whole by using question groups, or set up numerical questions with random numbers using formula questions.

(*) NB: Don’t forget that some students may have visual impairments and require screen readers. You could try to add alt text to the image in such a way that the quiz question can still be answered, but without giving away too much information.

Thanks, Myrna. Do you have any update on this, now that students can simply screenshot a question and upload it to ChatGPT 4o Plus, which gives the correct answer with complete workings in just a few seconds? It is too late for me to change the MCQ mode for the 30% assessment this year, but I will have to change it for next year. Meanwhile, I am showing students how to make the best use of ChatGPT 4o and, despite having a very large bank of questions in randomised order, I anticipate they should all get 100%. The test is more a test of using ChatGPT than anything else, especially since the Centre requires students to take it remotely.